In this post we are going to walk through a proposal for a new mechanism for structuring on-demand work. For those new to the topic, let me set the scene before we dive in. On-demand work is work done by humans who are paid by the task, such as per ride for Uber’s drivers or per judgement for Facebook’s content moderators. These tasks fill what is called the last mile of AI: the gap between what AI can actually do and what makes it useful in the world. This gap is filled by humans performing tasks pumped out by inflexible APIs and completed through interfaces that prioritize speed above all else. These systems, of which MTurk is just one example, treat the workers integral to their function as replaceable and not worth investing in. This disregard leads to traumatic results. One woman, on the last day of her training before moderating Facebook content full time, began “to cry so hard that she [had] trouble breathing” after exposure to a single post.[1]

So how can we organize an on-demand work platform that fills the last mile of AI while also baking in respect for the human capital, mental health, and effort of individual contributors? (For an introduction from the data economics standpoint, with user examples, check out the talk Vi and I gave at RadicalXChange.)

Let's start by looking at the research on the current system. In their recent book Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass, Mary L. Gray and Siddharth Suri point to several pain points for on-demand workers. Here I want to focus on a possible mechanism for dealing with five such speed bumps: hypervigilance in workers, lack of team structures, no feedback systems, unskilled Task Requesters, and the struggle to hire the right worker for the task.

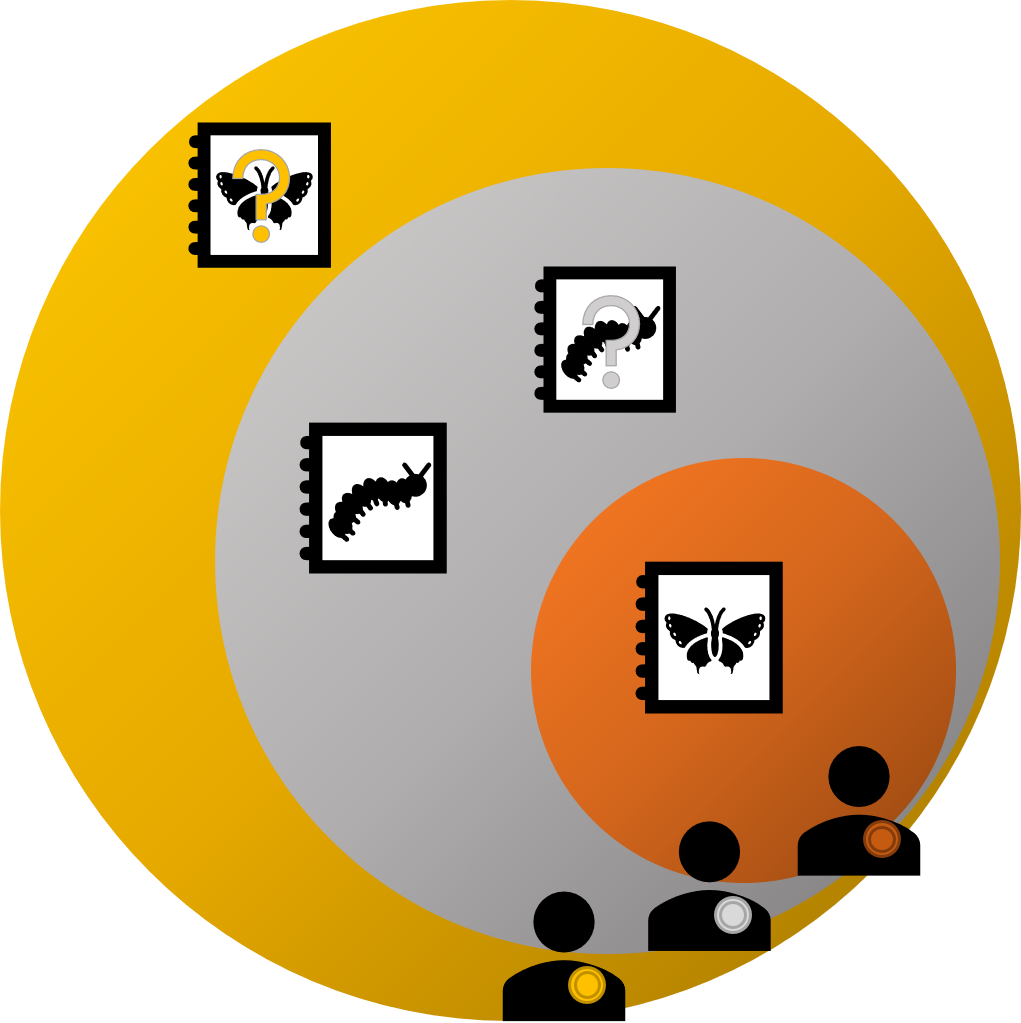

First, let's create a new structure for Task Requesters, the people and companies designing task-generating APIs. Current on-demand platforms tend to treat all Task Requesters as equal. Instead, we are going to divide our Requesters into caterpillars, who are still learning to design good tasks, and butterflies, who have earned trusted status.

These two types of Task Requesters interface with three types of Workers. Those new to on-demand work start at the Bronze level and, as they gain skills and experience, have the option to take on the higher-responsibility ranks of Silver and Gold.

This skills ramp will come in handy for everything from building trust with Task Requesters to structuring on-demand teams. But first, let's look at how these two groups of ranked contributors interact through a system of typed tasks. The four task types are Butterfly Tasks, Caterpillar Tasks, Feedback Tasks, and Conflict Tasks.

Caterpillar Tasks are akin to what is today a standard task on an on-demand platform: “Click all the images with sidewalks,” “Are these two pictures of the same person?” or “Does this flagged video meet our guidelines?” We make the distinction here because these tasks are created by unskilled Task Requesters. To improve the quality of these tasks, the platform automatically generates a Feedback Task every time a Caterpillar Task is generated.

- Feedback Tasks are designed to help Caterpillar Requesters both improve the quality of their tasks and ensure that compensation matches the labor required, making tasks easier to complete and raising wages for workers of all levels.

- Caterpillar Requesters are charged a higher per-task percentage fee to pay Silver and Gold Role Workers for the feedback they give.

- Feedback is given in the form of marked-up versions of the Caterpillar Requester's original task alongside the original task completed as instructed.

- Caterpillar Requesters gain points for every task posted which meets platform standards and is approved without need for changes. These points accrue to grant Butterfly status.

- Butterfly Requesters can voluntarily submit Feedback Tasks when working on a new kind of task, in order to build expertise and avoid generating a large number of more expensive Conflict Tasks.
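
To make the posting rules concrete, here is a minimal Python sketch of what might happen when a Requester posts work. The proposal does not give fee percentages or the number of points needed for Butterfly status, so `CATERPILLAR_FEE`, `BUTTERFLY_FEE`, and `BUTTERFLY_THRESHOLD` are hypothetical placeholders, as are all class and function names. It also assumes the extra Caterpillar fee is what funds the accompanying Feedback Task.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical values; the proposal does not specify fees or thresholds.
CATERPILLAR_FEE = 0.20     # higher per-task fee that funds Silver/Gold feedback
BUTTERFLY_FEE = 0.10
BUTTERFLY_THRESHOLD = 50   # approved-as-posted tasks needed for Butterfly status


class Status(Enum):
    CATERPILLAR = "caterpillar"
    BUTTERFLY = "butterfly"


class TaskType(Enum):
    CATERPILLAR = "caterpillar"
    BUTTERFLY = "butterfly"
    FEEDBACK = "feedback"
    CONFLICT = "conflict"


@dataclass
class Requester:
    name: str
    status: Status = Status.CATERPILLAR
    points: int = 0


@dataclass
class Task:
    task_type: TaskType
    requester: str
    price: float
    platform_fee: float


def post_task(req: Requester, price: float, want_feedback: bool = False) -> list[Task]:
    """Return every task the platform generates when a Requester posts work."""
    if req.status is Status.CATERPILLAR:
        fee = price * CATERPILLAR_FEE
        main = Task(TaskType.CATERPILLAR, req.name, price, fee)
        # Every Caterpillar Task spawns a Feedback Task paid out of the fee.
        return [main, Task(TaskType.FEEDBACK, req.name, fee, 0.0)]
    main = Task(TaskType.BUTTERFLY, req.name, price, price * BUTTERFLY_FEE)
    if want_feedback:
        # Butterflies may opt in to feedback on an unfamiliar kind of task;
        # how that is funded is unspecified, so this reuses the Caterpillar rate.
        return [main, Task(TaskType.FEEDBACK, req.name, price * CATERPILLAR_FEE, 0.0)]
    return [main]


def record_review(req: Requester, approved_without_changes: bool) -> None:
    """Award a Caterpillar a point for a task approved as posted; promote at threshold."""
    if req.status is Status.CATERPILLAR and approved_without_changes:
        req.points += 1
        if req.points >= BUTTERFLY_THRESHOLD:
            req.status = Status.BUTTERFLY
```

Modeling the Feedback Task as its own task, rather than a flag on the original, lets the platform route it to Silver and Gold Workers independently.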

Butterfly Tasks are similar in content to Caterpillar Tasks but are generated by trusted contributors. However, even though these tasks are more likely to be of high quality, we need to ensure both Workers and Task Requesters have access to fair conflict resolution when disagreements over completion quality arise.

- Conflict Tasks are designed to evaluate finished tasks that Butterfly Requesters have rejected due to poor quality or incompleteness.

- Each Conflict Task is evaluated by a group of Gold Role Workers, and the aggregate of their decisions acts as the final decision.[2]

- Workers whose work has been rejected are still paid the full value of the original task.[3]
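
Footnotes 2 and 3 describe the arithmetic behind Conflict Tasks. The sketch below is one hypothetical reading of them: each Gold reviewer casts a single Worker-fault or Requester-fault vote, the 10% arbitration fee is assumed to be 10% of the task price, and pay for work found at fault counts against a Worker's mistake-pay allowance ($MP). The tenure-based scaling of that allowance is left out, and every name here is illustrative rather than part of the proposal.

```python
from dataclasses import dataclass

# Rates from footnotes 2 and 3; treating the fee as a share of the
# task price is an assumption.
ARBITRATION_FEE_RATE = 0.10
MISTAKE_PAY_RATE = 0.90


@dataclass
class ConflictOutcome:
    worker_fault_share: float  # fraction of Gold reviewers who found Worker fault
    worker_paid: float         # the Worker always receives the full task value
    requester_charge: float    # Requester-fault share of price plus arbitration fee


def resolve_conflict(worker_fault_votes: list[bool], price: float) -> ConflictOutcome:
    """Aggregate Gold Role Worker votes (True = Worker at fault) into an outcome."""
    if not worker_fault_votes:
        raise ValueError("a Conflict Task needs at least one Gold reviewer")
    n = len(worker_fault_votes)
    worker_votes = sum(worker_fault_votes)
    requester_share = (n - worker_votes) / n
    charge = requester_share * price + ARBITRATION_FEE_RATE * price
    return ConflictOutcome(worker_votes / n, price, charge)


class MistakePayLedger:
    """Tracks pay for work found at fault against the $MP allowance."""

    def __init__(self, payout_threshold: float) -> None:
        # $MP = 90% of the amount a Worker must earn before a payout.
        self.allowance = MISTAKE_PAY_RATE * payout_threshold
        self.used = 0.0

    def record_mistake(self, amount: float) -> bool:
        """Add mistake pay; return False once the Worker exceeds the allowance."""
        self.used += amount
        return self.used <= self.allowance
```

With footnote 2's numbers, ten reviewers casting eight Worker-fault votes on a $10 task leave the Worker paid $10 and the Requester charged $2 plus a $1 arbitration fee. When a task counts as a mistake (for example, more than half the reviewers finding Worker fault) is left to the caller, since the proposal does not fix that cutoff.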

Rewarding Requesters

In addition to this ongoing feedback loop,[4] which helps ensure Requesters stay committed to high-quality postings, Requesters who have gained Butterfly status are listed on a public leaderboard. Nicole Immorlica’s work on mechanisms for maximizing social good in non-monetary systems shows that a leaderboard with a cutoff is the best method for incentivizing quality participation in this kind of context.

Rank is based on points awarded. Requesters gain points in several ways:

- Caterpillar Requesters gain points for every task posted which meets platform standards and is approved without need for changes.

- Each Butterfly Task is scored out of 10 by the Worker who completes it. Individual reviews are kept private from the Requester but are reflected in aggregate in the Requester’s ranking.

- A Requester loses points for repeatedly generating Conflict Tasks in which they are found more than 50% at fault.
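
Here is a minimal sketch of how these point sources might combine into a public leaderboard with a cutoff. The penalty size, the reading of “repeatedly” as more than a fixed number of lost Conflict Tasks, and the top-k cutoff are all hypothetical parameters. Only names and totals are published, so individual Worker ratings stay private.

```python
from dataclasses import dataclass, field

# Hypothetical parameters; the proposal leaves these unspecified.
CONFLICT_GRACE = 2      # "repeatedly" read as more than this many lost Conflict Tasks
CONFLICT_PENALTY = 5    # points lost per lost Conflict Task beyond the grace count
LEADERBOARD_CUTOFF = 3  # only the top-k Butterfly Requesters are listed


@dataclass
class RequesterRecord:
    name: str
    is_butterfly: bool
    approval_points: int = 0  # earned as a Caterpillar for tasks approved as posted
    ratings: list[int] = field(default_factory=list)  # private 0-10 Worker scores
    conflicts_lost: int = 0   # Conflict Tasks where the Requester was >50% at fault

    def add_rating(self, score: int) -> None:
        if not 0 <= score <= 10:
            raise ValueError("ratings are out of 10")
        self.ratings.append(score)

    def total_points(self) -> int:
        excess = max(0, self.conflicts_lost - CONFLICT_GRACE)
        return self.approval_points + sum(self.ratings) - CONFLICT_PENALTY * excess


def leaderboard(records: list[RequesterRecord]) -> list[tuple[str, int]]:
    """Public view: Butterflies only, top-k by points, names and totals only."""
    butterflies = [r for r in records if r.is_butterfly]
    ranked = sorted(butterflies, key=lambda r: r.total_points(), reverse=True)
    return [(r.name, r.total_points()) for r in ranked[:LEADERBOARD_CUTOFF]]
```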

Matching Tasks to Workers

Using these components, we can structure a system that makes onboarding easier for new workers while also making it easier for Task Requesters to hire skilled workers for more complex tasks.

- Tasks are gated by worker level to ensure that less experienced workers, who are still learning to perform tasks effectively, have access only to quality-controlled Butterfly Tasks.

- Workers gain levels by completing tasks and avoiding repeated poor-quality judgements from Conflict Tasks.

- Higher level workers may choose to take on managerial tasks and/or tasks gated by Task Requesters to be completed only by experienced workers.

- An example of a higher-level gated task would be asking a worker to improve a model via a Machine Teaching method instead of a less involved labeling method.
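
The gating rules above can be sketched as a pair of small functions. The minimum levels for Feedback and Conflict Tasks come from the earlier sections; restricting Caterpillar Tasks to Silver and above is an inference from Bronze Workers seeing only Butterfly Tasks, and the promotion thresholds are hypothetical. `eligible_level` reports the highest level a Worker qualifies for; taking on that rank remains the Worker's choice.

```python
from enum import IntEnum


class Level(IntEnum):
    BRONZE = 1
    SILVER = 2
    GOLD = 3


# Platform-wide minimum Worker level per task type.
MIN_LEVEL = {
    "butterfly": Level.BRONZE,
    "caterpillar": Level.SILVER,  # inferred: Bronze Workers see only Butterfly Tasks
    "feedback": Level.SILVER,
    "conflict": Level.GOLD,
}

# Hypothetical promotion rules.
PROMOTION_TASKS = {Level.SILVER: 200, Level.GOLD: 1000}
MAX_CONFLICTS_LOST = 3


def can_take(worker: Level, task_type: str, requester_min: Level = Level.BRONZE) -> bool:
    """A Worker must clear both the platform gate and any Requester-set gate."""
    return worker >= max(MIN_LEVEL[task_type], requester_min)


def eligible_level(tasks_completed: int, conflicts_lost: int) -> Level:
    """Highest level a Worker qualifies for; repeated Conflict losses block promotion."""
    if conflicts_lost > MAX_CONFLICTS_LOST:
        return Level.BRONZE
    level = Level.BRONZE
    for candidate, min_tasks in sorted(PROMOTION_TASKS.items()):
        if tasks_completed >= min_tasks:
            level = candidate
    return level
```

Using an ordered `IntEnum` keeps each gate a single comparison, so a Requester-set minimum and the platform minimum combine with `max`.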

Flash Teams

Whether you are one of the growing cohort of on-demand workers, from ride-sharing drivers to data workers, or live in a neighborhood racked by one of the now-frequent climate disasters, from hurricanes to wildfires, success depends on creating ad-hoc teams that can change dynamically in response to shifting conditions. While this initial formulation includes only three roles with a linear progression between them, the system could easily be expanded to allow for on-demand teams with role- or level-gated tasks. This section is a brief introduction to Flash Teams, based on the work of a group of Stanford researchers titled Flash Organizations: Crowdsourcing Complex Work By Structuring Crowds As Organizations.

- With workers tiered by experience level, the next step is to create cross-tier, role-based skill groups. For example, Workers can tag themselves with their areas of experience, such as Interface Design or Software Testing, making it easier to find the right person for a particular role and experience level within a team.

- Because around 20% of workers do the majority of the work on on-demand platforms,[5] these teams would benefit from both “asset specificity, the value that comes from people working together over time,” and “role structures, which are activity-based positions that can be assumed by anyone with necessary training.”[6]
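
As a rough illustration, here is a greedy sketch of staffing a Flash Team from tagged Workers. It is not the method from the Flash Organizations paper: it simply fills each role with an unassigned Worker who has the matching tag and level, preferring, as a hypothetical stand-in for asset specificity, Workers with the most past tasks alongside teammates already chosen, then higher level. The `tasks_with` history and all names are assumptions.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Level(IntEnum):
    BRONZE = 1
    SILVER = 2
    GOLD = 3


@dataclass
class Worker:
    name: str
    level: Level
    tags: set[str] = field(default_factory=set)  # self-declared experience areas
    # Hypothetical history: number of past tasks completed with each other Worker.
    tasks_with: dict[str, int] = field(default_factory=dict)


@dataclass
class Role:
    title: str
    tag: str
    min_level: Level


def staff_team(roles: list[Role], pool: list[Worker]) -> dict[str, str]:
    """Greedily map each role title to an unassigned Worker with the tag and level.

    Among qualified Workers, prefer the most shared history with teammates
    already chosen, then the higher level. Roles with no qualified Worker
    are left unfilled.
    """
    team: dict[str, str] = {}
    chosen: set[str] = set()
    for role in roles:
        candidates = [
            w for w in pool
            if w.name not in chosen and role.tag in w.tags and w.level >= role.min_level
        ]
        if not candidates:
            continue
        best = max(
            candidates,
            key=lambda w: (sum(w.tasks_with.get(n, 0) for n in chosen), w.level),
        )
        team[role.title] = best.name
        chosen.add(best.name)
    return team
```

A greedy pass is easy to follow but can leave a later role unfilled that a different assignment would have covered; a bipartite matching would avoid that at the cost of some complexity.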

Conclusion

This early prototype focuses on how tiered responsibility on both the Worker and Task Requester sides, combined with a clearly routed feedback system, may eliminate many of the pain points both groups currently experience on on-demand platforms. It also shows how this simplified example could be extended into a broader and more powerful ad-hoc team-building system.

1. Casey Newton, “The Trauma Floor: The Secret Lives of Facebook Moderators in America,” The Verge.

2. For example, if 10 Gold Role Workers are required to review each Conflict Task and a particular task is judged to be Worker fault by 80% of them and Requester fault by 20%, the task receives an 80% grade. The Worker receives full pay and feedback. The Butterfly Requester receives feedback and pays 20% of the task price plus an arbitration fee of 10%.

3. Workers can earn up to $MP in mistake pay before they are banned from the platform, where $MP = 90% of the initial payout amount. So if the platform requires you to earn $100 before you can receive a payout, then $MP = $90. Mistake pay is allotted per number of tasks completed, or as a percentage of total pay over a time period, so that workers who have been on the platform longer are not unfairly punished for mistakes.

4. Along with the financial stick covered in footnote 3.

5. Jesse Chandler, Pam Mueller, and Gabriele Paolacci, “Nonnaivete Among Amazon Mechanical Turk Workers: Consequences and Solutions for Behavioral Researchers,” Behavior Research Methods 46, no. 1 (March 2014): 112-30, https://doi.org/10.3758/s13428-013-0365-7

6. Melissa A. Valentine, Daniela Retelny, Alexandra To, Negar Rahmati, Tulsee Doshi, and Michael S. Bernstein, “Flash Organizations: Crowdsourcing Complex Work By Structuring Crowds As Organizations.”