I’ve been imagining again. This time it’s a way of working with nested scripting techniques for incorporating AI and other algorithms into a simple spatial code. There are a lot of ideas in this one, ideas I do not have full clarity on yet, as the research is still in progress. If you’re a reader of this blog you might remember this piece I wrote, in which I conceptualized a VR interface for collaborating with generative machine learning algorithms in order to simultaneously recognize and generate examples of hand gestures (e.g. a wave). There are few things more fundamental to my research process than collage and prototyping, so building on that foundation, here we will explore a method for collaging this prototype with a simplified tree of branching if statements using a “gestural” programming block.

First let me set some assumptions. The end user programming techniques I am imagining here are happening within a Social Mixed Reality Developer Platform. This is a software/place which:

- Is a tool for creative collaboration. Being together and making things is crucial to how our team works but more importantly it’s how new ideas get shaped and solidified in the world.

- Is compatible across high and low power VR headsets (Vive and Oculus Quest for example) as well as HoloLens and other MR headsets. This allows the greatest number of users to use and contribute to the platform.

- Is a flexible tool capable of enabling users and teams in all aspects of creating their own interactive immersive multi-user environments, prototypes, and software.

This post is not intended to flesh out all aspects of this platform’s design and functionality as that too is an ongoing project. But with this framework let’s turn to the main topic of the post: playing with user-generated programming blocks with nested AI functionality.

In the video we see the creation of a new tool within the platform: a hand tool (see the side note at the end of this post). I want my users and myself to be able to touch and grab specific things in the environment, so I show how I might go about programming my new hand tool to interact with a door and a steering wheel.

A Buggy Door

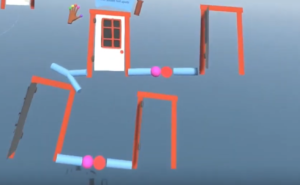

The first thing I wanted when upgrading this island lighthouse was a door that opened. To program this interaction, I used 2 types of programming objects: logic objects and environmental objects.

The logic objects consist of 2 types:

- ‘then/when,’ a straight blue tube

- ‘if,’ a blue tube ending in a Y fork

The environmental objects include:

- tools and their parts

- world objects and their parts

Neither of these 2 types of programming objects is fully explored in this narrow example, but I wanted to show this version first for clarity. This simple program says:

“If the door is in the closed state, when the pink hot spot from the hand tool intersects the door handle then door goes to open state. If the door is in the open state, when the pink hot spot from the hand tool intersects the door handle then the door goes to the open state.”

There’s a bug in there!
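
One way to see the bug is to write the two branches out as a tiny state machine. The sketch below is only an illustration in Python, not the platform's spatial code. The names `DoorState`, `buggy_door_program`, and `fixed_door_program` are made up for this example, and it assumes the bug is that the second branch sends an already open door to the open state again.

```python
from enum import Enum


class DoorState(Enum):
    CLOSED = "closed"
    OPEN = "open"


def buggy_door_program(state: DoorState, hot_spot_on_handle: bool) -> DoorState:
    """The two branches as quoted above, transcribed literally (hypothetical)."""
    if state is DoorState.CLOSED and hot_spot_on_handle:
        return DoorState.OPEN
    if state is DoorState.OPEN and hot_spot_on_handle:
        return DoorState.OPEN  # assumed bug: an open door can never close
    return state  # no intersection, so nothing changes


def fixed_door_program(state: DoorState, hot_spot_on_handle: bool) -> DoorState:
    """Assumed fix: the open branch sends the door to the closed state."""
    if state is DoorState.CLOSED and hot_spot_on_handle:
        return DoorState.OPEN
    if state is DoorState.OPEN and hot_spot_on_handle:
        return DoorState.CLOSED
    return state
```

Touching the handle twice leaves the door open under `buggy_door_program`, while `fixed_door_program` opens it and then closes it again.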

This example is incredibly simple and focuses entirely on a state machine based model, but what happens when we want to introduce a more complex triggering interaction than simply 2 parts overlapping in 3D space?

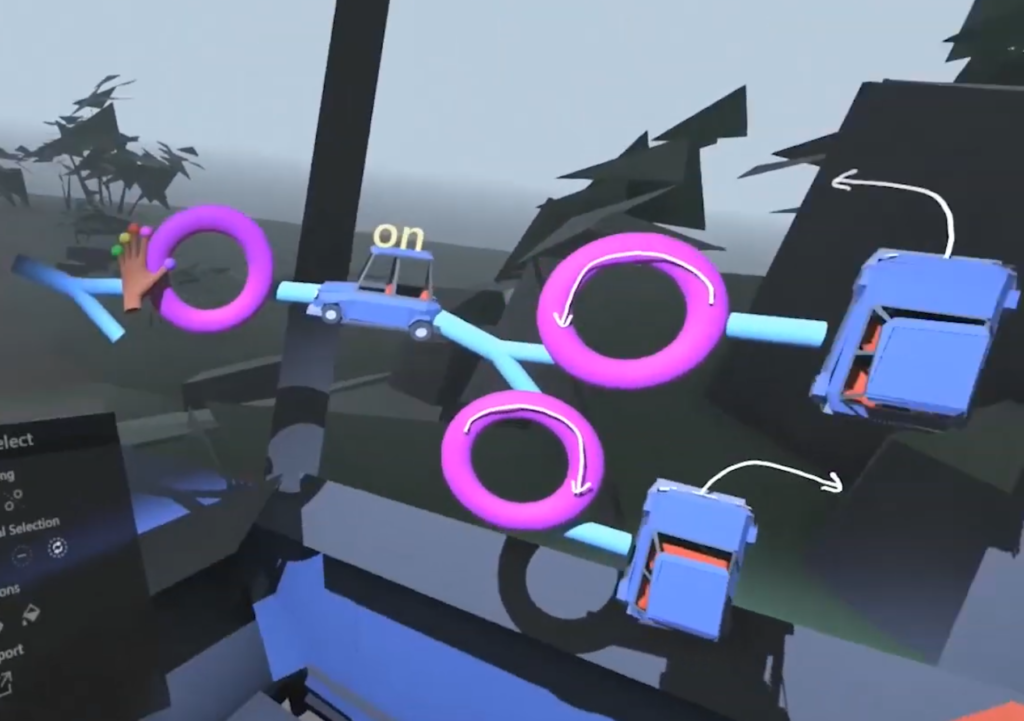

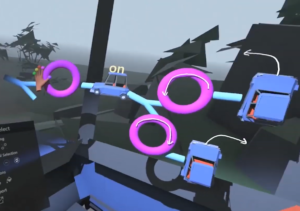

A Steering Wheel

Our steering wheel code uses the same logic and environmental object types used in the door/hand tool script but here we’re adding one new type: a gestural modifier. The gestural modifier is attached to the environmental object of the steering wheel and allows me to add a more complicated interaction into my simple branching code.

This modifier technique abstracts away complexity we don’t need to see while programming at this level, but zoom in and our machine learning model creation stations are revealed! This method would also work for nesting traditional algorithms, but I’m going to focus on machine learning.

There are many ways to translate the rotation of one object to the rotation of another, but for the sake of this prototype we will assume 2 things. First, the position of the steering wheel doesn’t matter: it can be used to steer the car from inside or outside the vehicle and in any position. Second, instead of creating a fixed definition of steering in our code, we will gather data about a variety of steering gestures in various positions from ourselves and others. This data will be used to create a model of steering which can be integrated into our declarative code via the simplified representation of the gestural modifier.
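
To make the nesting idea a little more concrete, here is a minimal sketch of a gestural modifier plugged into a branching rule. Everything in it is an assumption for illustration: the `GesturalModifier` class, the `steering_rule` function, and the idea of describing a gesture as a short tuple of position-independent features are all hypothetical, and a nearest-centroid classifier stands in for whatever model the machine learning stations would actually produce.

```python
from math import dist
from statistics import fmean
from typing import Dict, List, Tuple

Features = Tuple[float, ...]


class GesturalModifier:
    """Hypothetical stand-in for a learned gesture model (nearest centroid)."""

    def __init__(self, label: str) -> None:
        self.label = label  # the gesture this modifier should recognize
        self.samples: Dict[str, List[Features]] = {}
        self.centroids: Dict[str, Features] = {}

    def add_sample(self, label: str, features: Features) -> None:
        """Collect one labeled example, from ourselves or from others."""
        self.samples.setdefault(label, []).append(tuple(features))

    def train(self) -> None:
        """Average the examples of each label into a single centroid."""
        self.centroids = {
            label: tuple(fmean(axis) for axis in zip(*rows))
            for label, rows in self.samples.items()
        }

    def matches(self, features: Features) -> bool:
        """True when the nearest centroid belongs to this modifier's label."""
        nearest = min(
            self.centroids,
            key=lambda label: dist(self.centroids[label], features),
        )
        return nearest == self.label


def steering_rule(modifier: GesturalModifier, hand_grabs_wheel: bool,
                  features: Features, wheel_rotation_deg: float) -> float:
    """If the hand grabs the wheel, when the gesture reads as steering,
    then the car turns by the wheel's rotation; otherwise it does not turn."""
    if hand_grabs_wheel and modifier.matches(features):
        return wheel_rotation_deg
    return 0.0
```

The branching rule only ever asks the modifier a yes or no question, so the model behind it can be retrained or swapped out without touching the rule itself.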

I’m not going to go deep on how the machine learning stations work, as that is covered in a previous blog post, but just for review, this prototype has 3 stations: the first for data input and labeling, the second for fine-tuning the model via collaboration with the generative algorithm, and the third, in which the system attempts to explain its behavior in order to increase both legibility and ease of fine-tuning. The video shows this prototype in its original form, which focuses on recognizing and generating a wave gesture instead of a steering gesture, but the stations and workflow would be similar.

Emit Bubbles

Now that I’ve established the concept of grouping logic objects with environmental objects, which can be internally reshaped by machine learning or other algorithmic modifiers in a mixed reality environment, we can let our imaginations run wild about other kinds of modifiers this concept makes possible.

- Have a weather station at home? “If raining at home start rain.”

- Wearing a HoloLens? “If I enter the living room while wearing the HoloLens, when right hand brushes off the back of left hand play ‘energetic playlist’.”

- In VR with your friends? “If left hand waves, emit sparks. If right hand waves, emit bubbles.”

All unique behaviors created by users for their own silly and serious purposes.
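
As a rough sketch of how such behaviors might sit side by side, the rules above can be written as pairs of a condition and an action. The `RULES` table, the `run_rules` function, and the keys of the `world` dictionary are all hypothetical names for this example. Each condition stands in for a modifier, whether it is backed by a weather station or by a gesture model.

```python
from typing import Callable, Dict, List, Tuple

World = Dict[str, bool]
Rule = Tuple[Callable[[World], bool], str]

# Each rule pairs a modifier-style condition with the behavior it triggers.
RULES: List[Rule] = [
    (lambda world: world.get("raining_at_home", False), "start rain"),
    (lambda world: world.get("left_hand_waves", False), "emit sparks"),
    (lambda world: world.get("right_hand_waves", False), "emit bubbles"),
]


def run_rules(world: World, rules: List[Rule] = RULES) -> List[str]:
    """Return the actions whose conditions hold in the current world state."""
    return [action for condition, action in rules if condition(world)]
```

For example, `run_rules({"right_hand_waves": True})` returns only the bubbles action, and an empty world state triggers nothing.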

Out and back again

This work continues my attempts to bring the principles of end-user programming (tools which allow people who are not professional software engineers to create and modify their own software artifacts without knowledge of traditional programming languages) into spatial and embodied computational environments. I care about this work because empowered practice leads to monumental results. It wasn’t a professional shoemaker who changed ballet forever but 19th century ballerina Marie Taglioni, whose experimentally darned shoes and meticulously practiced feet led directly to the invention of both the technique of pointe and the technology of hard toe pointe shoes, which when combined sparked a revolution in the aesthetics of ballet as a whole. These tools are Marie Taglioni’s needles and yarn: simple but powerful in the right hands (or feet).

- Side note: A hand tool is a standard way for people to interact with immersive environments and therefore might come standard as part of the platform. The idea here is that the developer in the video is modifying that available tool. I say things like “when the hand grabs the steering wheel, then this other code occurs.” The grabbing action is assumed to be pre-programmed into the hand tool with statements like “if Vive or Oculus Quest controllers then grabbing = trigger is pulled” or “if HoloLens then grabbing = fist closed when intersecting object”. In future prototypes I’d like to show how even these underlying aspects of tools within the platform could be modified with the same programming interfaces used for giving behaviors to the environments and tools themselves. However, the goal for right now is to show an example of a user creating and customizing a new tool within the platform, a tool which could then be incorporated into a user-generated tools library available to other creators.
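
The pre-programmed grabbing statements quoted in the side note could be sketched roughly like this. The function name `is_grabbing`, the device strings, and the boolean inputs are hypothetical and are not part of any real headset SDK.

```python
def is_grabbing(device: str, trigger_pulled: bool = False,
                fist_closed: bool = False,
                intersecting_object: bool = False) -> bool:
    """Resolve 'grabbing' per device, following the statements quoted above."""
    if device in ("vive", "oculus_quest"):
        # Controller-based headsets: grabbing = trigger is pulled.
        return trigger_pulled
    if device == "hololens":
        # Hand-tracked headset: grabbing = fist closed when intersecting object.
        return fist_closed and intersecting_object
    return False  # unknown devices never report a grab in this sketch
```

Rules such as “when the hand grabs the steering wheel” would then only need the result of `is_grabbing`, not the details of each device.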