Introduction

Recently my hands were craving computation. We were waiting on some VR hardware and software that would allow us to prototype and design computational tools with our hands and bodies, but I got impatient and decided to go physical in the meantime. And so I picked up some littleBits kits, and also fired up the 3d printer to prototype some ideas for physical+AR spatial programming, and then at some point while writing this post I threw in some interactive system simulations using Loopy, so get ready to wander through a giant pile of thoughts.

Hover over the “length of this post” node and press the up arrow, and you’ll understand my writing process:

What are littleBits?

The “bits” in littleBits are blocks with individual components, like dials and sliders, LEDs and proximity sensors, that magnetically snap together quickly and easily to make electronics projects.

For example, here’s a simple 3-bit circuit where power connects to a proximity sensor, which is connected to an LED bargraph, indicating how close the camera is while taking this photo:

I made a video documenting my explorations if you’d like to see them in action. It is helpful but not necessary for giving context to the rest of the post:

Thoughts on Augmenting Hands-On Learning with Direct Representations

The thing I was looking to learn about littleBits is how we can use the knowledge we have in our hands, of fiddling and combining and snapping, to explore and iterate on ideas in a way that traditional text-based interfaces on flat screens don’t allow. I want to access modes of thought that are spatial and nonverbal, and that take advantage of physical affordances our hands are adept at multitasking in. One hand changes a dial while another reaches for a new block, and at the same time our mind is free to think in verbal thoughts or higher level abstractions.

Particularly I am interested in spatial tools for working with complex dynamic systems and other mathematical concepts, and this work is currently being inspired by some AI papers I’ve been reading and our group’s ambitions to make the mathematics behind AI systems easier to understand.

My experience in math research is that very few mathematicians actually think in mathematical symbols. We think and communicate in other kinds of representations, and only use the notation for formal things like proofs and papers. The actual representations in our heads are often spatial, they float around and dynamically respond to our imagined poking and stretching, and before recently there was no way to put anything like that into a real, shared, physical space. The best research gets done when we’re waving our hands and drawing on napkins over lunch. But now with AR and VR, now with computers, we can absolutely create spatial representations that respond dynamically and computationally to our pokes and stretches in real time, more like our lunchtime imaginings and less like blackboard proofs.

The old notation is like teaching music students to read scores without being able to listen to a recording, and only a very few hard workers will learn to do it and enjoy the beauty of a symphony, while even fewer will be able to write one. We want to invent the microphone, the phonograph, the piano.

For physical programming or electronics toys like littleBits, I wish I had an AR overlay where I can pull out the state of all the blocks, or see graphs over time, or other information. I want to see representations and abstractions that allow me to work directly with mathematical concepts like derivatives and state spaces without needing the extra steps of translating in and out of traditional math notation.

For example, say I had a motor that spun a wheel, and an arm attached to the wheel moved a sculpture back and forth in a smooth motion, similar to what is shown in the video but more firmly connected. What kind of motion would you expect when you start with something spinning in a circle, and then just take one dimension of its movement?

Readers of this blog are probably more likely than average to know about the intrinsic relationship between circles and sine waves, but most people just don’t have the experience. In my work doing math outreach, I’ve heard the conflicted cries of many a student understanding for the first time what sine waves and circles have to do with each other, and they are happy because they finally understand, and frustrated because their math class failed to teach them this. Many had been shown diagrams of circles with triangles in them that have labels on their legs and hypotenuse, but that’s not helpful if you don’t know how to translate that static visual into a dynamic mental construct. There is something that clicks for many people when they see the relationship in real time:

In a previous project of ours, Real Virtual Physics, we wanted students to be able to not just see these kinds of relationships but to feel them as well.

Real Virtual Physics used VR to overlay mathematical information on the movement of the physical, spatially-tracked controllers. You could grab and place various graphs, such as the controller’s Y position, speed, or acceleration, and then see how the graph responds to your own motion. This way instead of having to look at two things at once to see a relationship, you only have to look at one. You know how the controller is moving because you are moving it. Instead of having to visually multitask, your mind is free to contemplate questions such as why when you shake the controller back and forth in what you think of as “fast”, it is actually not moving fast at all at either end of the movement, yet the acceleration peaks there. Or that to get a smooth high level of fast speed, you might try swinging the controller in circles, and notice that the graph of the y position looks like a sine wave. If you drop or throw the controller, you can see how gravity creates acceleration, parabolas, etc.
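For readers who would rather poke at numbers than at a controller, here is a minimal sketch of the shaking and circling motions described above. It is not code from Real Virtual Physics; the function names `shake` and `circle_point` are made up for illustration, and it assumes an idealized, perfectly smooth back-and-forth motion.

```python
import math

def shake(t, amplitude=1.0, frequency=1.0):
    """Position, velocity, and acceleration of an idealized back-and-forth shake."""
    w = 2 * math.pi * frequency
    y = amplitude * math.sin(w * t)            # where the controller is
    v = amplitude * w * math.cos(w * t)        # how fast it is moving
    a = -amplitude * w * w * math.sin(w * t)   # how hard it is being pushed
    return y, v, a

def circle_point(t, radius=1.0, frequency=1.0):
    """Position of a controller swung around a circle at constant speed."""
    angle = 2 * math.pi * frequency * t
    return radius * math.cos(angle), radius * math.sin(angle)

# Sample one cycle at quarter-period steps: at the ends of the shake
# (t = 0.25 and t = 0.75) the speed drops to zero while the acceleration peaks.
for t in (0.0, 0.25, 0.5, 0.75):
    y, v, a = shake(t)
    print(f"t={t:.2f}  y={y:+.2f}  speed={abs(v):.2f}  accel={a:+.2f}")

# The y coordinate of the circling controller traces the same sine wave.
print(circle_point(0.1)[1], shake(0.1)[0])
```

Under these assumptions, the "fast" shake is momentarily standing still at each end, and taking just the y coordinate of circular motion gives exactly the back-and-forth wave.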

And let us not take for granted basic ideas about translating motions and variables and time into a graph with time on the x axis and some other variable on the y axis. Graph literacy is often glossed over in schools as if the visual language and representations should be obvious. It’s not. Standard examples sometimes make things harder: when students are shown a graph of a parabola, and told it can describe the path of a ball being thrown, space and time are easily conflated.

In one of the modes of Real Virtual Physics, the controller drops a trail of points over time, essentially drawing its path through space. There’s a moment in the demo video where the user draws a path that moves up and down, and then goes out of drawing mode and into the mode that allows you to poke at the points and see information about them. The user highlights a spot in the path, and the points light up, along with their corresponding points in the graphical representation. Even though the points in space are very close together, the corresponding points are not. They are on opposite sides of a parabola, because one was dropped on the upswing and one on the downswing. Something about this, when you actually do it, is surprising even though it is obvious in retrospect. A similar moment happens when the path follows a back-and-forth pendulum motion, and the highlighted points on the wavy-looking graph come in sets across every other wave.

Hands-on learning is amazingly effective sometimes, and plenty of children learn deep concepts from educational toys. And yet too often these things completely fail. They are risky and expensive. Somehow we have to get at building and communicating the kind of representations that are actually in people’s heads, and connect our physical tools to those representations. We can’t just put students through the motions of activities and problem-solving and leave it to chance whether they will build a mental model.

A Quick Rant on Math Education and Systems Education

Teaching students to actually understand things is politically nonviable because we don’t know how to directly measure understanding, so we’re left with a you-get-what-you-measure system of measuring things that are measurable, such as calculation ability, and pretending it correlates. With teachers judged on test scores across only a single year’s time, there’s just no incentive to go back and fix shaky foundations rather than push on just a little further. It seems as if the only way out is to find a way to let students build conceptual understanding fast enough that it makes a difference to measurable outcomes within a year, and performs better than spending that time focusing on memorization, cramming, and number-shuffling calculations that allow students to fake the measurable correlates of understanding in the short term, to the detriment of their actual understanding, and often causing trauma and stress where there should be beauty and joy, which should be a crime and makes me more than a little angry.

Maybe with the right tools we can change what we measure, focus on concepts, and leave the calculations to computers. Then we’d have plenty of time to get everyone to the important (and lovely!) concepts from calculus and differential equations. Maybe we’d have time to get people to an understanding of systems, which increasingly rule our lives. Political systems, justice systems, social systems, social media systems, economic systems, environmental systems, and now AI systems.

I think AR and VR will make possible tools that allow anyone to work and think out loud with advanced mathematics, now that we can put mathematical concepts in their natural home in space and time and with live computations. Inventing that language might take a while—after all, 1500 years passed between when the Pythagoreans proved the irrationality of the square root of 2 with barely any math notation at their disposal, and when Arabic numerals finally came to the western world. It had been an elite skill to be able to wrangle Roman numerals into doing simple calculations, but with a simple notational change suddenly anyone could add and multiply. What similar notational changes wait to be discovered? What notation and language ideas are possible only now that we can place computationally alive objects in 3d space around us, instead of being limited to static marks on a page?

I want everyone to have a deep intuitive understanding of systems ideas, such as the idea that large changes and huge efforts can make no difference at all if the system is created to absorb them. Ideas like stable and unstable equilibrium, intuitions for how exponential and linear growth interact. I want people to be able to feel, and intuit, the kind of ways systems can behave, even if they leave the actual analysis to experts. I want people to be able to form a complex representation in their head when they hear someone talk about capital gains taxes, or viral trending online content, or how global warming might affect global air or ocean circulation.

These general ideas and aspirations are behind a lot of our work, and before I get back to littleBits I’d like to introduce a tool I’m going to attempt to use to illustrate some of the systems.

Loopy: a Tool for Thinking in Systems

Loopy is a tool for thinking in systems made by Nicky Case. Loopy makes it very easy to create and share certain types of simple interactive systems examples, such as this illustration of how Nicky conceptualizes depression and anxiety. Hover over a node to poke an arrow up or down and see what happens!

Loopy is one small foray into the kinds of systems thinking tools I hope will someday be available, and so I’m going to be using it in this post to see what we can learn about how to abstract systems ideas from physical interfaces like littleBits, and also about how to integrate interactive systems tools and systems thinking to shake up things like blog posts, where otherwise I’d just be talking about how important it is to actually poke systems without letting you actually poke a system. (If you’re interested in interactive systems simulation blog posts, also see Parable of the Polygons, by Nicky and myself.)

littleBits + Loopy = physical system + abstraction

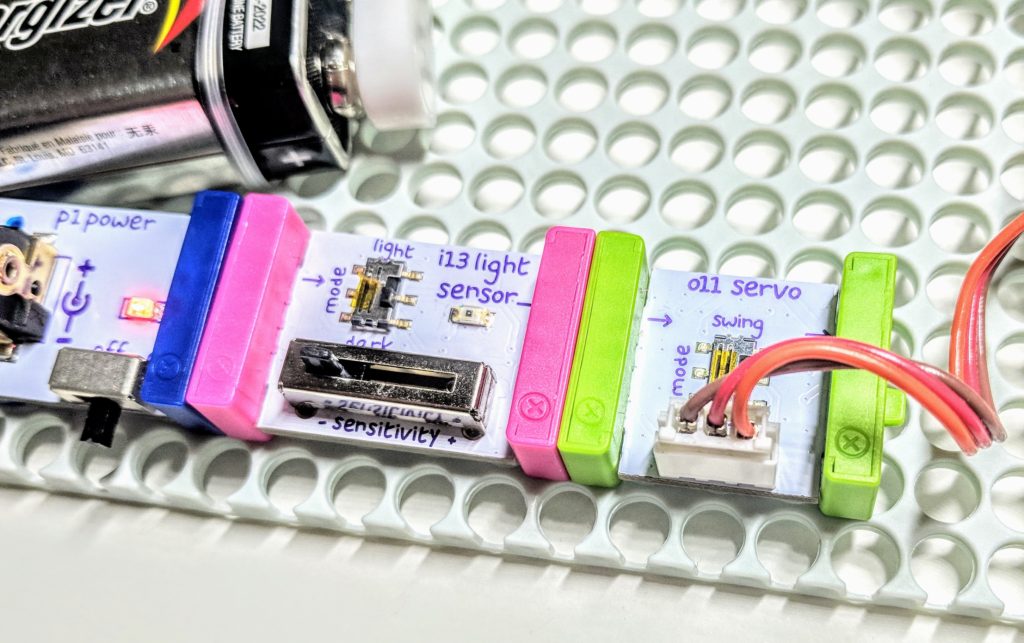

My favorite thing about littleBits is the ease and speed of trying out ideas. The process is somewhat combinatorial, with certain possible circuits being obvious. First always comes the Power bit. There are a certain number of “Input” bits (which are pink), like sensors and dials. There are also “Output” bits (in green), such as buzzers, LEDs, servos, and motors. It’s easy to let your hands play with the combinatorics of connecting the power to first an input bit, then an output bit, and seeing how it behaves.

Very quickly you run into feedback loops, as I discovered when first connecting a sound trigger input to a buzzer output, or when connecting a light sensor to the LED output:

One of Loopy’s limitations is that I can’t tell it that light only exists as a positive quantity, and darkness cannot be shined on light to lessen it, but if you’re not trying to break the example it does fine. (I’d love to see a future version of Loopy, or whatever other tool it inspires, that has extended math options!)

When working with electronics it’s easy to accidentally run into unwanted feedback loops, with mic feedback as a well known example. And similarly to darkness not cancelling out light, adding silence doesn’t remove sound, so both are runaway positive feedback loops that go in only one direction and require a big intervention to stop, usually some form of turning it off and on again.

But could I use littleBits to make other kinds of feedback loops, or more complicated systems?

In the next example, the power bit feeds into a light sensor, which then connects to a servo. The light sensor will let through an amount of power that depends on how much light hits it. It can be set to respond to either light or darkness, and there’s a sensitivity slider. This in turn sets the position (not speed in this case) of the servo.

The catch is that I’ve attached a feather to the servo, which is capable of shading the light sensor. Now we have a system where the light sensor affects the servo and the servo affects the light sensor, and with just this simple setup we can observe the basic systems phenomena of positive and negative feedback loops, stable and unstable equilibria, and oscillation due to over-sensitivity and delay.

Here’s one of the possible systems we might encounter, depending on how we set the sensor:

In the above example there are two opposite self-reinforcing feedback loops: if the sensor starts getting more light, it pushes the shade away, giving it more light, pushing the shade further away, and so on until the servo runs out of range. Whereas if the sensor starts getting more shade it will move the feather closer, creating more shade, and so on until the feather is completely on top of the light sensor.

Both of those end states, full light or full shade, are stable. At the extreme points, a small poke at the system will be absorbed. It takes a large shove, such as manually covering the light sensor when the feather is far away, to flip it to the other state. In the above interactive example you can try poking at the system and see if you can click fast enough to flip it once it is at either extreme.

I want people to be able to feel the state space, the way the unstable middle ground has this peak that falls to either side as sure as gravity. If you poke a ball just a little up a hill, it will roll right back down to where it was. It won’t suddenly leap up and over to the other side, no matter how many little pokes you give it, unless you do all the pokes nearly at the same time. (Loopy itself doesn’t quite function like this, but shhh that’s not the point)
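If you'd like to feel that hill in numbers, here is a toy sketch of the self-reinforcing feather system. It is not a model of the real littleBits electronics: the `gain`, `rate`, and `poke` parameters and the function names are all assumptions chosen for illustration. Position 1.0 means the feather is fully out of the way (full light) and 0.0 means it sits on top of the sensor (full shade).

```python
def clamp(x, lo=0.0, hi=1.0):
    return max(lo, min(hi, x))

def step(position, gain=3.0, rate=0.2, poke=0.0):
    """One tick: the light the sensor sees sets where the servo wants to go."""
    light = clamp(position + poke)               # a far-away feather means more light
    target = clamp(0.5 + gain * (light - 0.5))   # more light pushes the feather further away
    return position + rate * (target - position)

def settle(position, steps=200):
    """Let the system run with no outside interference."""
    for _ in range(steps):
        position = step(position)
    return position

def shove(position, poke, hold_steps):
    """Hold a poke (e.g. a hand shading the sensor) for a while, then let go."""
    for _ in range(hold_steps):
        position = step(position, poke=poke)
    return settle(position)

print(settle(0.51))                              # just above the middle: runs away to full light
print(settle(0.49))                              # just below the middle: runs away to full shade
print(shove(1.0, poke=-0.1, hold_steps=4))       # small poke at the extreme: absorbed
print(shove(1.0, poke=-0.8, hold_steps=2))       # big shove, released too soon: rolls back
print(shove(1.0, poke=-0.8, hold_steps=4))       # big shove, held long enough: flips
```

In this sketch the middle position is the top of the hill, and the two extremes are the valleys on either side. Only a shove that is both large and sustained carries the system over.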

Now here’s the case where the sensor is flipped, and it is darkness that pushes the feather away:

This is a different kind of feedback loop. It hovers around a theoretical stable middle ground, and because of the delays in feedback it oscillates. You could think of the light sensor as correcting the position of the feather when it is too close or too far, but it has a tendency to over-correct and swing to the other side. The world is full of systems that develop oscillations due to poorly timed over-corrections, and it is possible for oscillations to increase in size and grow out of control, eventually breaking the system.
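Here is a minimal sketch of how delay turns a correcting loop into an oscillating one. As before, this is a toy model rather than a measurement of the real servo and sensor; the `delay`, `gain`, and `rate` values and the function names are assumptions picked to make the effect visible.

```python
def clamp(x, lo=0.0, hi=1.0):
    return max(lo, min(hi, x))

def simulate(delay, start=0.51, gain=3.0, rate=0.2, steps=300):
    """Feather position over time when darkness pushes the feather away.

    The servo reacts to the light level from `delay` ticks ago,
    standing in for lag in the sensor, servo, and floppy feather.
    """
    history = [start] * (delay + 1)
    for _ in range(steps):
        seen_light = history[-1 - delay]                  # stale reading of the light level
        target = clamp(0.5 - gain * (seen_light - 0.5))   # too much light: move the feather closer
        history.append(history[-1] + rate * (target - history[-1]))
    return history

def late_swing(history, window=50):
    """How far the position is still swinging near the end of the run."""
    tail = history[-window:]
    return max(tail) - min(tail)

print(late_swing(simulate(delay=0)))   # no delay: settles quietly in the middle
print(late_swing(simulate(delay=5)))   # delayed feedback: keeps swinging back and forth
```

With no delay the correction lands on the stable middle and stays there. With the same gain and a few ticks of lag, each correction arrives too late and overshoots, so the swings grow until the clamps (the limits of the servo's range) hold them in check.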

I decided to play with making an over-correcting feedback loop example to compare to the kinds of feedback loops we’ve already looked at, and here’s what I came up with:

(Y’know that meme “I’m in this picture and I don’t like it”?)

I think I’m getting the hang of Loopy! But back to littleBits:

I also made a version of the littleBits oscillating shadowbot where the servo connected to a long flappy piece of paper, allowing for more time delay and chaotic random effects, and attached a paint brush in the spirit of art-based research practice:

While the results may not be a masterpiece, looking at them reminds me of the movements that made them, and evokes within me the systems ideas that led to their creation. I can hear the servo whining, the paper flapping, and the brush wildly slapping the paper, much faster than I had expected when I imagined how the system would work. What seemed so simple in theory quickly proved itself to be out of my control, flinging paint everywhere, and in my surprise I momentarily forgot the off switch and instead gaped helplessly, hoping that it would slow down, as if the speed of the motor were set by a dial or variable rather than the dynamics of the system itself combined with physics, and then I burst into laughter at the absurdity of it all. Would that everyone could create and display such reminders of their mathematical systems experiences and understanding!

I’d like to explore creating more complex and chaotic systems with littleBits, by having more sensors interact with each other through the movements of servos and motors.

Final Thoughts

Aside from the littleBits systems explorations, it was generally fun to play with the order of input blocks and see how that changes things, which was extremely easy to do with the magnetic connections. Attach the dimmer before the oscillator and speaker, and the dimmer changes the pitch. Attach the dimmer between the oscillator and speaker, and it changes the volume. There’s a bit that functions like a tiny keyboard, and if you attach a dimmer before it, you can tune it to various microtonal scales. And if you attach servos or motors after musical bits, they will move to the music, which is my favorite!
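The effect of ordering can be sketched as function composition. This is a deliberately simplified toy, not a description of the actual littleBits circuitry: the `Tone` type, the function names, and the 880 Hz maximum pitch are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Tone:
    pitch_hz: float
    volume: float

def dim_control(level, setting):
    """Dimmer placed before the oscillator: scales the incoming control level."""
    return level * setting

def oscillator(control_level, max_hz=880.0):
    """Toy oscillator: the incoming level sets the pitch, output is at full volume."""
    return Tone(pitch_hz=max_hz * control_level, volume=1.0)

def dim_tone(tone, setting):
    """Dimmer placed after the oscillator: scales the sound itself."""
    return Tone(pitch_hz=tone.pitch_hz, volume=tone.volume * setting)

power = 1.0

# power -> dimmer -> oscillator -> speaker: the dial changes the pitch
before = oscillator(dim_control(power, 0.5))

# power -> oscillator -> dimmer -> speaker: the dial changes the volume
between = dim_tone(oscillator(power), 0.5)

print(before)
print(between)
```

The same dial at the same setting does two different jobs depending on where it sits in the chain, which is the kind of thing that is easy to discover by snapping blocks together in a different order.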

Sometimes I had success improvising with how to combine blocks, and observing the result. Other times I’d find myself having an idea first, and then thinking “hey, I could make that in littleBits in about 30 seconds”. It’s a good sign when a tool allows for implementing planned ideas as well as improvisational play. The kits also come with instructions for specific projects, which I imagine is useful for kids just starting out and without a good understanding of circuits, sensors, or robotics to start with. I have no idea whether tools like these would be effective for kids without the help of a knowledgeable adult, but they certainly were fun for me!

The physicality of littleBits is both its strength and weakness. It’s expensive, your supply of blocks is limited, and making new projects means destroying your old ones. Getting at the internal states of the blocks is impossible; you can only see their effects on other blocks. If they were in VR or AR instead, you’d be able to copy and paste, add infinite blocks of any type, modify the blocks, and see what’s going on inside them. You could save projects, modify them, share them. And you could still take advantage of your hands’ ability to grab and arrange things in 3d space.

Virtual blocks would also be able to modify themselves to fit the level of the user. Physical educational toys tend to have the problem that either they are easy to use but don’t have much depth, or they are powerful but have a high barrier to entry and for many kids will sit as an expensive pile of junk in a corner forever. It can be overwhelming to open a box full of a dozen different kinds of blocks, many of which have their own sliders and settings on them, but a virtual version or the help of a virtual overlay could focus down to just a couple to start with. Rather than a project seeming to fail because a sensitivity or pitch adjustment was way off, a virtual block could automatically adjust itself based on environmental information, and once a kid had a feel for what the block does then they could open up the block and manually change the settings if they choose. Virtual projects could start partially completed, or entirely completed, allowing a learner to fiddle with a working system before starting from scratch.

In a virtual version you’d miss out on some of the physical affordances that make fingers happy, like dials, switches, and the pleasing snappiness to the connections. And of course without physical servos you can’t make projects that animate a physical sculpture, paint with a real paint brush, or draw all over your real kitchen floor with a real sharpie.

And that’s why we decided to prototype what it might be like to combine virtual 3d spatial programming blocks, like in our previous project designing a spatial programming language for VR, with physical programming blocks with physical affordances. So we put our 3d printer to work and let our hands see what they wanted, and what they wanted was to be able to pull the “spirits” out of physical bits of code in order to copy, place, modify, and reuse them. We can have the best of both worlds. Real and virtual. Physical systems and abstractions. Everything connected, everything pokeable and modifiable. More on that next time!