Introduction

We like the idea of being able to do computation and programming in a natural way, in real time, in space in front of us. We like using the knowledge in our hands and bodies to think faster and better than our minds could do with words alone. We believe new paradigms for spatial understanding and live interactive computation environments will greatly expand human ability to understand complex systems, from giant code bases to machine learning algorithms and beyond.

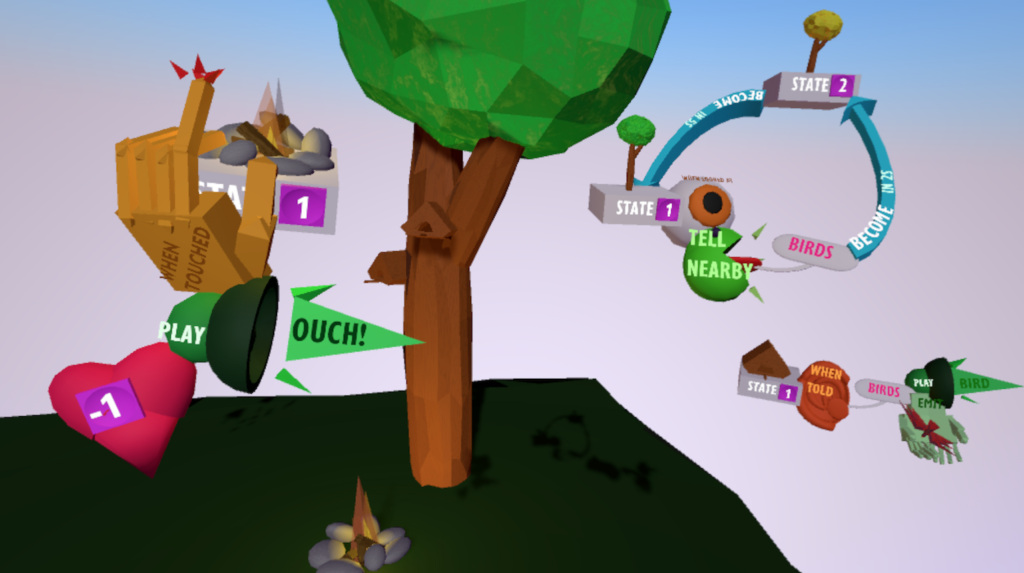

In the past we’ve researched designs for spatial programming in AR/VR, using code blocks like this:

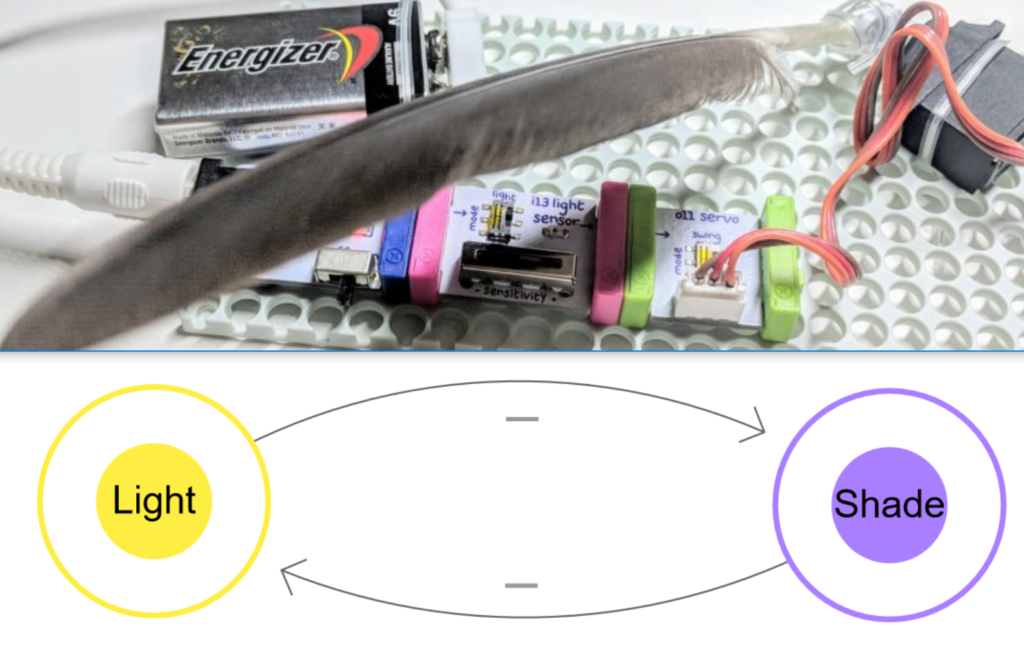

In my latest post I wrote about the electronics toy littleBits and hands-on explorations of dynamic systems, which looked like this:

We followed up on that work with experiments in designing systems that combine physical and virtual components, to maintain all the affordances of physical objects while also gaining the advantages of scalability, flexibility, and live computation that virtual components offer.

1. 3D Printed + AR Code Blocks

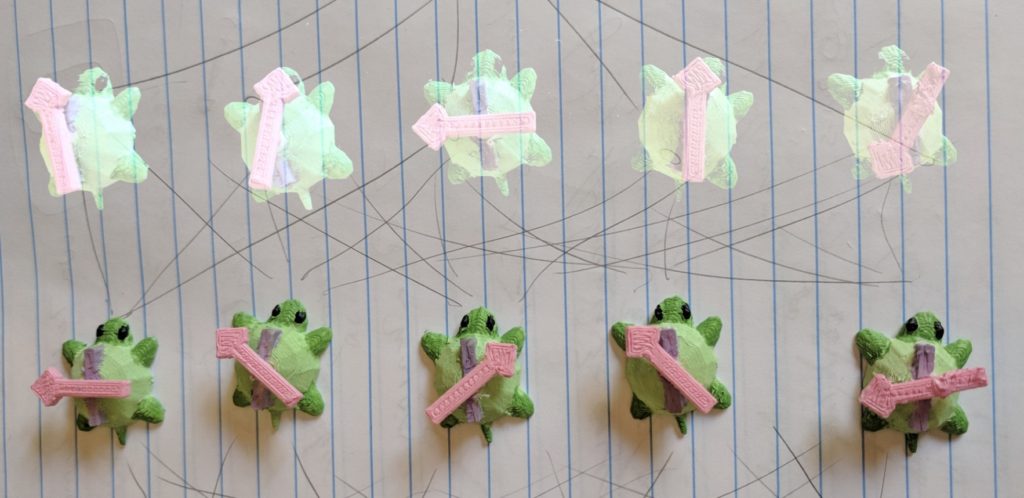

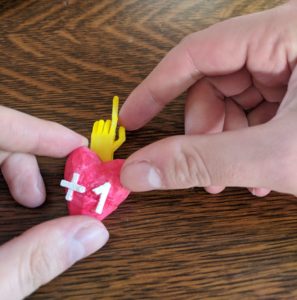

We tried 3D printing some of our virtual code blocks:

It was somewhat strange to have these blocks, which we’d been working with in AR and VR for years, suddenly be physical objects that you can touch and feel. But it very quickly became natural to move and arrange them together into small chunks of code, like the above “when you touch a cake, get +1 health”.

But then what?

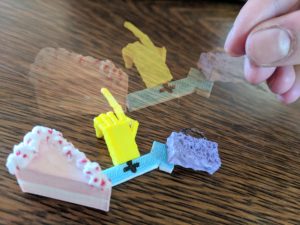

We mocked up some ideas of what it would be like to be able to make AR copies of physical bits and place them around the home. This way you could fiddle with the physical code blocks in an exploratory way with your hands in a natural comfortable position in front of you, and perhaps you come up with the behavior “when touched, add a cake to your bag”. You then can pull the “spirit” out of your physical piece of code:

Now you can copy, scale, modify, or attach this behavior to something else in your space. Perhaps I want to add this code to a real cake that’s in my fridge, so that when I touch it I collect a virtual cake.

I can re-use my physical code blocks for a different chunk of code, without destroying my work. I don’t need to 3D print (or buy) more and more pieces in order to gain access to large project creation.

When we first started experimenting with AR code blocks in 2017 (which Evelyn writes more about in this post from that year), we were using phone-based AR that tracked physical paper markers. We arbitrarily chose a library of 8 blocks out of our vast VR code block library to play with:

The AR code blocks only showed up when seen through the phone, but they did have a physical component. We could play with moving around the physical paper bits in various orientations. They might not be the 8 primitives you’d choose if you were trying to implement a programming language for actual use, but they were good for our purpose, which was to see how it felt to have these blocks in space, interacting with physical objects.

We then brought the same code blocks into the HoloLens, where they were purely virtual. There was nothing to touch, but we were no longer limited by size or by gravity.

2. Turtles and Manipulable Primitives

We began our physical code block explorations by 3D printing this same set of code blocks to see how that felt, but we realized it was time to move to a new set of blocks. There are some limitations we’re stuck with in physical space: physical blocks can’t be oriented any-which-way in midair, can’t freely intersect with and pass through each other, and unless they are robotic they are not computationally alive. But printing them out also made them seem very much like static chunks of plastic, which they didn’t need to be!

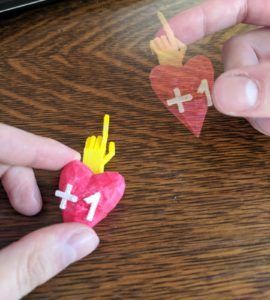

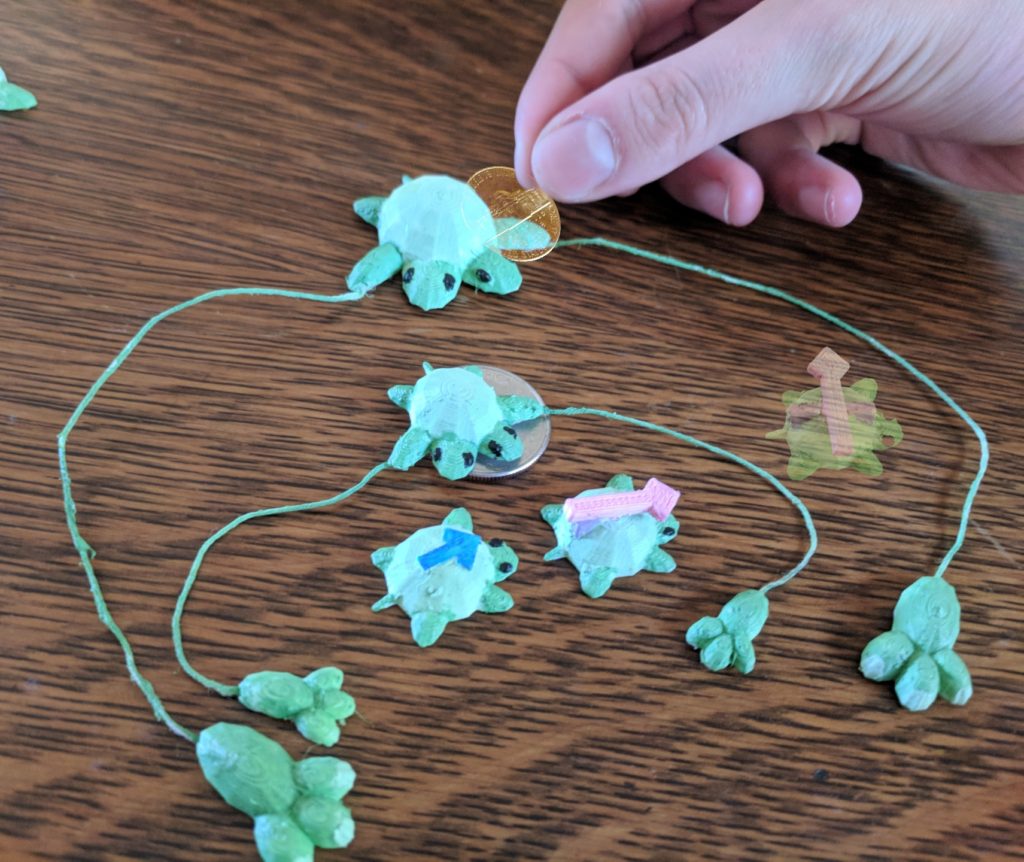

So for our next exploration, we made some LOGO-inspired turtle blocks. The basic blocks give directions that tell a turtle how to move in space. The “move forward” block is a simple static arrow in blue (on a turtle), but the “turn” block is more interesting:

In LOGO, as in most languages, if you want to turn by some angle you type in some number that represents an angle when interpreted correctly as radians or degrees. Instead, our blocks have moveable arrows that let you set the angle as an actual angle.

LOGO also allows loops, which we made into turtle hugs, letting you know to loop whatever is in the bounds of the hug rather than having disconnected floating parentheses that hopefully you paired correctly. Here’s how to draw a square by repeatedly moving forward and turning 90 degrees:
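The square program described above can also be sketched in text form. This is only an illustration, not how our physical blocks are implemented: the class names `Forward`, `Turn`, and `Hug` and the `run` function are hypothetical, and the turtle is assumed to live on a flat plane, starting at the origin and facing along the x axis.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Forward:
    steps: float = 1.0  # distance to move along the current heading


@dataclass
class Turn:
    degrees: float  # counterclockwise turn, as set by the block's arrow


@dataclass
class Hug:
    """A loop: everything inside the hug is repeated `times` times."""
    times: int
    body: list = field(default_factory=list)


def run(blocks, start=(0.0, 0.0, 0.0)):
    """Execute blocks and return the list of (x, y) points the turtle visits.

    `start` is (x, y, heading in degrees); this convention is an assumption.
    """
    x, y, heading = start
    path = [(x, y)]

    def execute(block_list):
        nonlocal x, y, heading
        for block in block_list:
            if isinstance(block, Forward):
                x += block.steps * math.cos(math.radians(heading))
                y += block.steps * math.sin(math.radians(heading))
                path.append((x, y))
            elif isinstance(block, Turn):
                heading = (heading + block.degrees) % 360
            elif isinstance(block, Hug):
                for _ in range(block.times):
                    execute(block.body)  # hugs can contain other hugs

    execute(blocks)
    return path


# One hug around "move forward" and "turn 90 degrees", repeated four times.
square = [Hug(times=4, body=[Forward(1), Turn(90)])]
square_path = run(square)
```

The hug owns its contents as a nested list, so there is no separate closing marker that could be mismatched, which is the property the physical hug gives us for free.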

There’s something appealing about representing angles as angles, and now we have a list of other basic mathematical objects that it would be great to have physical manipulables for. We envision being able to see the changes in your code represented in real time as you play with physical manipulable primitives, whether of angles, imaginary numbers, or tensor products. With AR you can see the computation happening in space right before your eyes while your hands make the changes eyes-free, allowing you to get a feel for how mathematical objects behave.

Imagine being able to see the turtle’s path change as you change this angle in real time, not just changing a number that represents an angle but changing an actual angle, going from something like a circle to various stars:
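To get a rough feel for why one dial sweeps through polygons and stars, here is a small sketch. It assumes the program is "move forward, turn by a fixed whole number of degrees" repeated until the path closes; the function name `closed_path_shape` is made up for this post.

```python
import math


def closed_path_shape(turn_degrees: int):
    """Return (segments, windings) for a repeated forward-and-turn program.

    segments: how many forward moves happen before the path closes.
    windings: how many full rotations the turtle makes along the way.
    One winding gives a convex polygon; more than one gives a star.
    """
    turn = turn_degrees % 360
    if turn == 0:
        raise ValueError("a turtle that never turns never closes its path")
    common = math.gcd(turn, 360)
    return 360 // common, turn // common


square = closed_path_shape(90)       # 4 segments, 1 winding
pentagram = closed_path_shape(144)   # 5 segments, 2 windings
near_circle = closed_path_shape(1)   # 360 tiny segments, 1 winding
```

Nudging the angle from 1 degree toward 144 degrees moves the same two-block program from something that looks like a circle to a five-pointed star, without any other change to the code.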

Through these examples we hope to better consider what a programming language could be in the first place. We don’t know what works. This is why we’re exploring various ideas through small experiments that are aimed only at learning new possible directions, rather than having tried to design and implement an entire functioning VR programming language that only slightly extends existing blocks-based languages, as we might have been tempted to do when we first started.

3. Letting Go of Order

Our first inspiration was blocks programming languages such as Scratch and Etoys, and thinking about how nice it would be to be able to grab and manipulate programming blocks by hand. Once we started putting blocks in space we got to consider new questions. We don’t need to stick to a linear order, or to have them lock together in perfect alignment. Maybe the natural-feeling primitives of programming in space are different from the programming primitives of text-based programming. Maybe there’s syntactical information in the angle at which two blocks are put together, or in how much they overlap. Our goal was not to design a 3D interface for traditional programming languages, but a natively 3D programming paradigm. What assumptions about programming can we let go of?

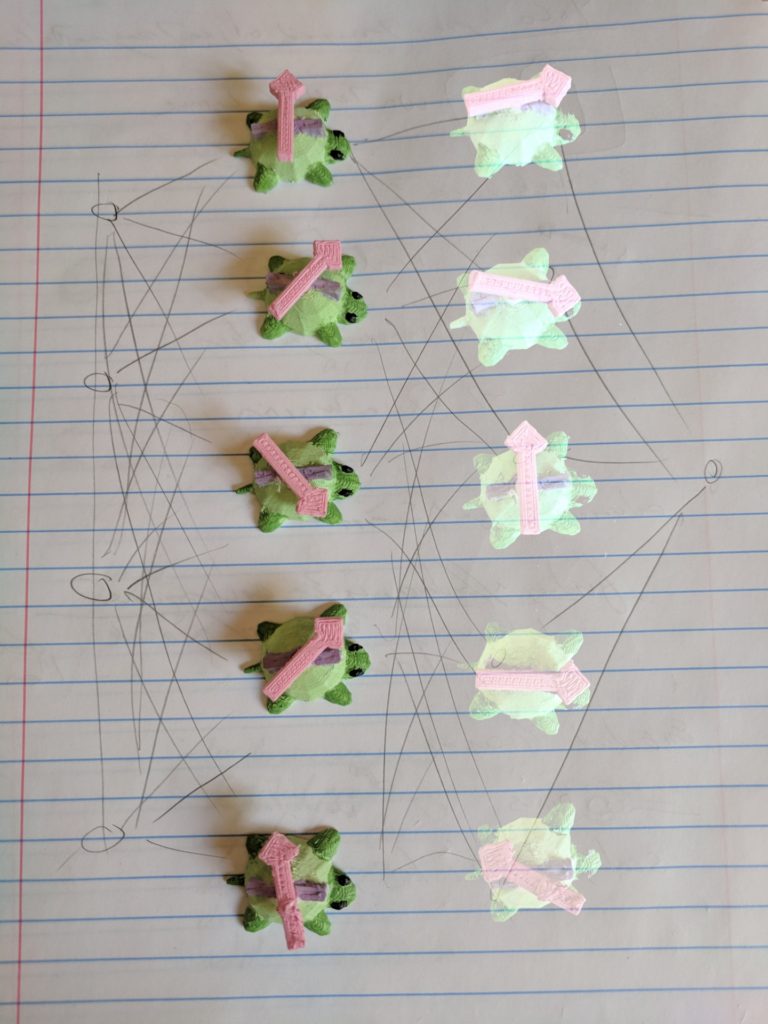

Sometimes order isn’t supposed to matter, like when assigning properties and behaviors to various objects. Say I want to make a game where I program various objects in my house to do different things when you touch them. I might combine two blocks to make a “when touched: +1 health” code spirit:

Now I can copy it and attach it to a few healthy foods:

In traditional programming languages the order can matter for resolving conflicts, but the results often end up feeling arbitrary or buggy. Like, say I attach both a +1 health and a -1 health code spirit to the onion, and then I start playing my game and get down to my last health point. What happens when I touch the onion? If the -1 health code executes first, I lose the game before the +1 health kicks in. But if the +1 health executes first, I’m in the clear. Traditional programming languages train us to think it ultimately has to be one or the other, or if it’s functionally not, it’s only because of the order of something else, like whether the game checks the health total before or after the second code block runs.
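The onion problem is easy to reproduce in a few lines. The sketch below is only an illustration: `touch_sequential` stands in for an ordered language that checks for game over after every effect, and `touch_net` is one possible order-independent reading (combine the effects, then check once). Neither function is a design we have settled on, and both names are hypothetical.

```python
def touch_sequential(health, effects):
    """Apply effects in list order, stopping as soon as health hits zero.

    Returns (health, alive).
    """
    for delta in effects:
        health += delta
        if health <= 0:
            return health, False
    return health, True


def touch_net(health, effects):
    """Combine all effects first, then check once, so order cannot matter.

    Returns (health, alive).
    """
    health += sum(effects)
    return health, health > 0


last_point = 1
minus_first = touch_sequential(last_point, [-1, +1])  # lose before +1 runs
plus_first = touch_sequential(last_point, [+1, -1])   # survive
unordered = touch_net(last_point, [-1, +1])           # same in either order
```

Summing is just the simplest unordered rule; the point is that the two spirits on the onion can be treated as a set rather than a sequence.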

Artificially imposed order and linearity suppress natural interaction between independent parts. Spatial programming languages may be able to better model complex dynamic systems, including certain kinds of AI. The most natural thing may be to use an AI compiler in the first place, one that can interpret what the +1 and -1 code bits “really mean” together, rather than taking them literally and in some arbitrary order.

4. Everything is Code, Everything has Meaning

We’ve been assuming we’re working with a near-future AR device that not only lets us place virtual code blocks in our spaces, but also has the object recognition capabilities that allow it to recognize and virtualize physical code blocks as well as other everyday objects. Objects contain syntactic information, with some more obvious than others, and we imagine an AI compiler that can be taught to interpret this.

For example, you may have noticed the coins in previous turtle examples. The compiler might understand that a penny attached to a movement block means “move forward 1 step”, and that a quarter attached to an angle that’s close to 90 degrees indicates it is meant to be a quarter turn.
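As a stand-in for that imagined compiler, the two coin rules can be written out by hand. Everything here is an assumption made for illustration: the `PhysicalBlock` fields, the `interpret` function, and especially the 15-degree tolerance for what counts as “close to 90 degrees”. A real system would presumably learn these associations rather than hard-code them.

```python
from dataclasses import dataclass
from typing import Optional

# How far from 90 degrees still counts as "close"; an arbitrary choice.
QUARTER_TURN_TOLERANCE = 15.0


@dataclass
class PhysicalBlock:
    kind: str                       # "move" or "turn"
    angle: Optional[float] = None   # measured arrow angle in degrees, if any
    attached: Optional[str] = None  # recognized object touching the block


def interpret(block):
    """Turn one recognized block into a (command, value) pair."""
    if block.kind == "move":
        # A penny means one step; with no coin the distance stays unspecified.
        steps = 1 if block.attached == "penny" else None
        return ("forward", steps)
    if block.kind == "turn":
        angle = block.angle
        near_quarter = (
            angle is not None and abs(angle - 90) <= QUARTER_TURN_TOLERANCE
        )
        if block.attached == "quarter" and near_quarter:
            angle = 90.0  # read the intent, not the slightly-off measurement
        return ("turn", angle)
    raise ValueError(f"unknown block kind: {block.kind}")


step = interpret(PhysicalBlock("move", attached="penny"))
snapped = interpret(PhysicalBlock("turn", angle=87.0, attached="quarter"))
literal = interpret(PhysicalBlock("turn", angle=87.0))
```

With the quarter attached, a sloppy 87-degree arrow compiles to an exact quarter turn; without it, the measured angle is taken literally.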

We can mix physical objects and virtual ones to make bigger chunks of code, or to help us out if we don’t have the right objects available.

LOGO can only take our experiments so far, as it was built to be a simple, ordered, domain-specific language. But maybe we can take what we learned about manipulable primitives and apply it to more complex applications. We can start by shoving our turtle blocks into places they don’t quite belong, like to represent the activation of neurons in a neural net:

…and work from there.

Or, for example, I tried shoving some simple turtle movement code onto a virtual sculpture, to program it to move in a circle (or approximate circle, by repeatedly moving forward at a slight angle):
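For the curious, here is a sketch of why this works, assuming a flat plane, a fixed step length, and a fixed counterclockwise turn. Repeating the two blocks traces a regular polygon whose corners all sit on a circle of radius step / (2 sin(turn / 2)). The function names are hypothetical and the numbers are arbitrary.

```python
import math


def circle_walk(step, turn_degrees, count):
    """Move forward by `step`, then turn left by `turn_degrees`, `count` times."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for _ in range(count):
        x += step * math.cos(heading)
        y += step * math.sin(heading)
        heading += math.radians(turn_degrees)
        points.append((x, y))
    return points


def circumradius(step, turn_degrees):
    """Radius of the circle that passes through every corner of the walk."""
    return step / (2 * math.sin(math.radians(turn_degrees) / 2))


step, turn = 0.1, 5
points = circle_walk(step, turn, 72)  # 72 turns of 5 degrees is one full loop
radius = circumradius(step, turn)

# The circle's center sits to the turtle's left of the first segment's midpoint.
center = (step / 2, radius * math.cos(math.radians(turn) / 2))
```

Smaller turns and shorter steps give a smoother approximation, at the cost of more repetitions per loop.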

This example helps us think about how code should live in space, near and on the things it affects. The shark sculpture should be able to have movement code inside it where it lives, not off on some separate rectangle in another program.

5. Gestural Programming Elements

Turtle programming is good as an exercise, but the language itself is a bit clunky and unnatural for an application like the shark movement code just mentioned. If I want a perfect circle, I should have one readily available within reach, perhaps in one of the many pockets of my virtual tool belt. I use circles often enough that I’d probably keep one hanging on my hip like Xena, Warrior Princess and Mathematician, ready to throw in instinctive reaction at any circle problems that come up in my work.

But for a movement path, perhaps I’d rather simply draw something that’s approximately a circle, or walk in a circle, or move an object around, and then just grab that path and toss it over to the shark. This path would be a more natural shape, and would also include the speed I want the shark to move, in a very direct and embodied way rather than creating the components separately and fiddling with them to get them right.

Many people’s formative experience in VR is when they first use a drawing program and see the path of their hand through time show up in space in front of them. It’s utterly magical and utterly natural. Paths in space-time seem like a basic functionality every native spatial computing environment should have available, same as points in space and orientations.
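A path in space-time could be as simple as a list of timestamped positions. The sketch below is one hypothetical shape for such a primitive, not an existing API: the class name `SpaceTimePath` and its methods are invented here, and the samples are assumed to arrive with strictly increasing timestamps. Because the timing is stored with the shape, the speed comes along for free.

```python
import math
from bisect import bisect_right


class SpaceTimePath:
    """Timestamped 3D samples, e.g. from a tracked hand or controller."""

    def __init__(self, samples):
        # samples: (t_seconds, (x, y, z)) pairs with strictly increasing t
        samples = list(samples)
        self.times = [t for t, _ in samples]
        self.points = [p for _, p in samples]

    def position_at(self, t):
        """Linearly interpolate the recorded position at time t (clamped)."""
        if t <= self.times[0]:
            return self.points[0]
        if t >= self.times[-1]:
            return self.points[-1]
        i = bisect_right(self.times, t)  # first sample strictly after t
        t0, t1 = self.times[i - 1], self.times[i]
        u = (t - t0) / (t1 - t0)
        p0, p1 = self.points[i - 1], self.points[i]
        return tuple(a + u * (b - a) for a, b in zip(p0, p1))

    def speeds(self):
        """Average speed over each recorded segment."""
        return [
            math.dist(self.points[i], self.points[i + 1])
            / (self.times[i + 1] - self.times[i])
            for i in range(len(self.times) - 1)
        ]


# A slow first segment followed by a faster second one.
path = SpaceTimePath([
    (0.0, (0.0, 0.0, 0.0)),
    (1.0, (1.0, 0.0, 0.0)),
    (1.5, (1.0, 1.0, 0.0)),
])
halfway = path.position_at(0.5)
segment_speeds = path.speeds()
```

Tossing the path to the shark would then mean handing over this one object; the shark replays `position_at` as time advances and inherits both the shape and the pacing of the original gesture.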

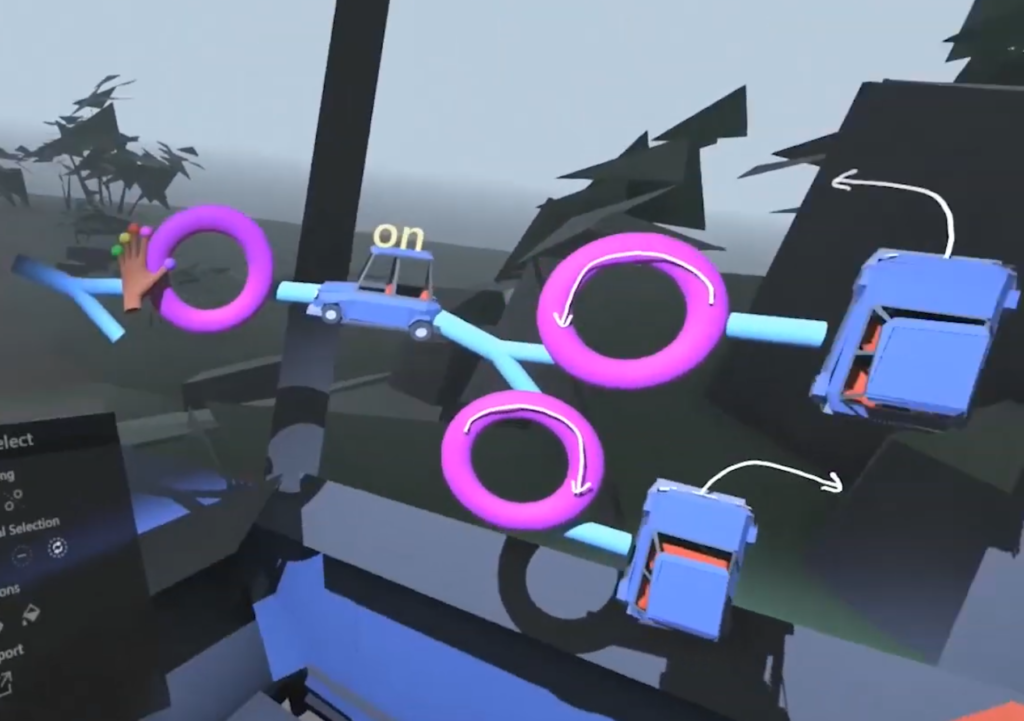

In M’s most recent post they wrote a bit about the idea of gestural programming blocks, where you can use machine learning to identify and recognize a particular gesture such as turning a steering wheel, opening a door, or whatever other behavior you want to work with in an application. This follows some of Jaron Lanier’s ideas about using AI to allow programming by example. We don’t have an architecture to program an entire system by example, but we propose some ideas for programming particular elements by example, so that a machine learning algorithm can create an object that represents a particular behavior. Then we can combine those gestural objects with other spatial programming blocks. We also propose tools to look inside that machine learning model and modify it to perform the way we want it to, also with the help of spatial tools.

For our shark example, perhaps I create a few examples of swimming motions for my shark sculpture, and feed them to a gestural block that creates variations on those examples. I can tell the block which variations I like or don’t like as I notice them throughout my day, if it starts swimming upside-down for example. I could go inside the block and really refine the model by giving it new examples and judging the results, especially if there’s other functionality of my code that depends on reasonable shark behavior. Otherwise I could always just leave it alone and let my shark do its weird shark thing.

6. Programming Language Wish List 🎋

To recap, we started in 2016 thinking about the potential of spatial programming languages as:

- 🔲 Blocks programming languages, but in VR in 3D

- 💃 Take advantage of embodied cognition to:

  - 👏 Be better at putting blocks together

  - 🗺 Create mental maps of large systems

Since then, we’ve added:

- 🔀 Take advantage of nonlinear order of programming elements

- 🙃 Orientation, relative position, and scale of programming blocks all matter to their meaning

- 👓 Can use AR, can apply AR blocks to physical items

- 👉 Can use physical code blocks in the real world

- 🖐 Use hand knowledge and physical affordances

- 🕹 Code blocks can be dynamic, with dials, switches, degrees of freedom, and maybe eventually robotics

- 👁 Use machine vision to identify and track objects

- 👻 Can pull the AR “spirit” out of physical code blocks, and combine AR with the physical

- 🎲 Can integrate any object (or its spirit)

- 📊 Compiler needs a way to access data about objects and their associated meanings

- 🍵 A program may compile differently depending on continuously changing cultural context

- 🍆 Code may compile differently depending on who made it and the meaning they attach to certain objects

- 🗣 This functionality would make some use cases difficult, but potentially expand the power and real-time expressiveness of computational tools more than exponentially. It’s more like actual language: its power goes hand in hand with its flexibility and associated potential for miscommunication

- 🌌 For native 3D space-time tools, we probably should have some basic functionality for basic 3D space-time things:

- 🌠 Paths in space-time

- 👋 Gestural objects

- 👩‍🔬 Math/Physics primitives:

- 🤸‍♀️ Orientations

- 🥌 Momentums

- 📐 Scale, matrix transformations, linear algebra in general

- 🚗 Probably calculus too

- 🤖 Machine learning models are just another useful standard math object

We’ve been calling the latest iteration of this vision “Spirit”, after the code spirits of physical objects, and also after the general idea that there is some meaning to a chunk of code that exists independently of the primitives that make it up. We imagine an AI compiler that can interpret the spirit of the code instead of the literal code chunks, but in the end it’s not the AI that is the ghost in the machine. It is people who create and share the meanings they find in things, whether through words or actions, whether by posting things online or doing specific data tasks.

Conclusion, Humans and Language

Our version of AI-compiled code is not one where computers start writing their own code, outpace humanity, and reach a technological singularity. Machine learning algorithms borrow and collage together our human-created meanings of things, and when we leverage them for computational tools we give ourselves more power and expressivity to create even greater human meaning. This in turn makes our AI tools better, but only because humanity is greater.

Our imagined AI compiler will never be able to do whatever it is a person does when they understand an existing piece of code or imagine a new one. All AI can do is a lot of very simple math very very fast. But there’s nothing quite like fast math on good data! It may start with simple experiments, but eventually it should be possible to build a system that can bootstrap itself into a functioning AI-compiled physical spatial programming language, as long as it has the continuing input of human programmers with expressive coding skills.

Natural languages get created through collaboration, not just of people talking out their interpretations of the world with each other in words and text, but also through expressing things with the aid of tools, whether artistic, cultural, or computational. The computational tools of the future could help us think and feel out loud and in real time, not just through the words or text our brain can think of but also with the movements our bodies and hands can express in the context of our surroundings.